Week 6

Due Dates & Links

- Lab Report 2 Resubmission - Due 10:00 pm Monday February 13, 2023 (Resubmit opens once grades are released)

- Lab Report 3 - Due 10:00 pm Monday February 13, 2023

- Quiz 6 (will be released after class Monday) - Due 9:00 am (just before class) Wednesday February 15, 2023

Lecture Materials

Lecture Videos (Monday)

Video Shorts

The emoji dataset can be found here.

In-class notes

Links to Podcast

Note: Links will require you to log in as a UCSD student

Lab Tasks

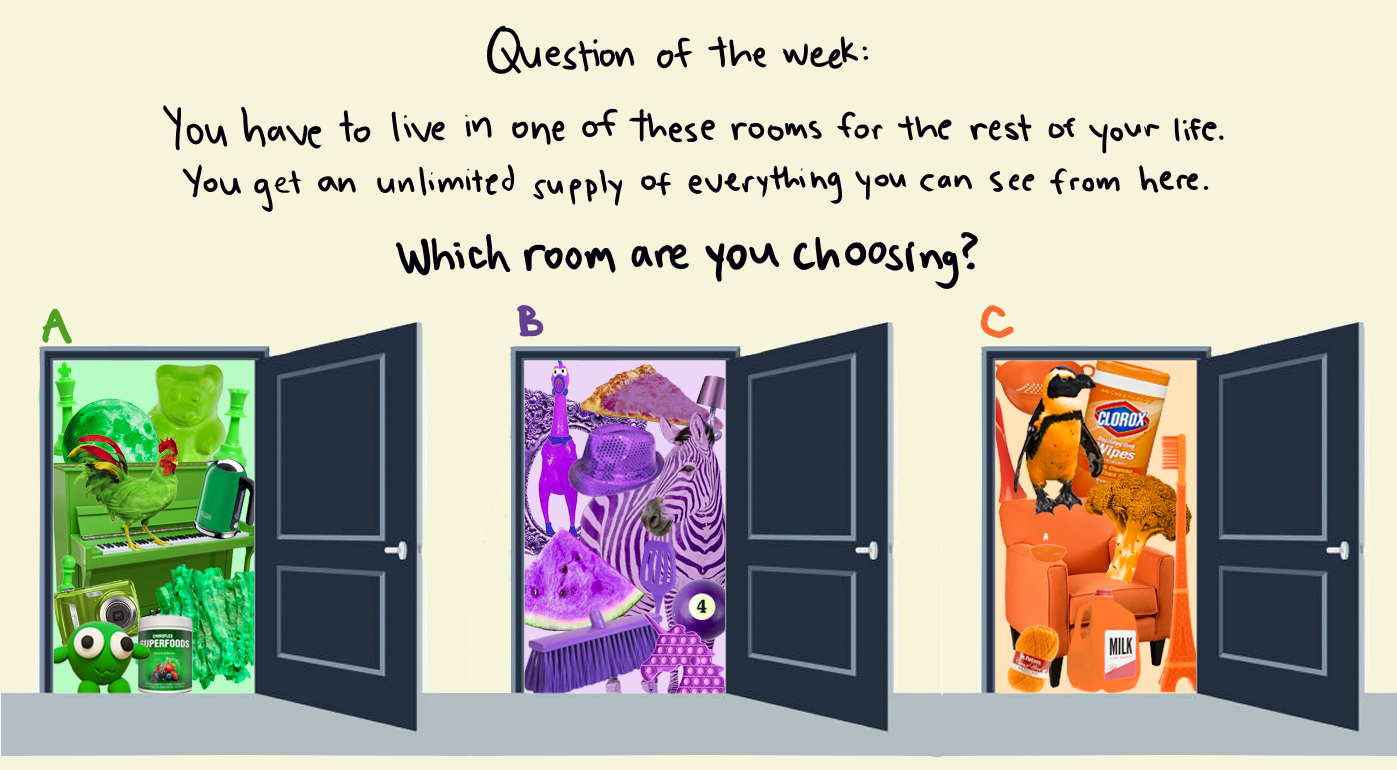

Discuss with your group:

Write down your answers (and why you chose them!) in your group’s shared doc.

In this week’s lab you will write an automatic “grader” for some of the methods we worked on in week 3.

In particular, you’ll write a script and a test file that together give a score to the functionality of a student-submitted ListExamples file and class (see ListExamples.java). Specifically, you’ll write a bash script that takes the URL of a GitHub repository and prints out a grade:

$ bash grade.sh https://github.com/some-username/some-repo-name

... messages about points ...

This will work with a test file that you write in order to grade students’ work. You can use this repository to get started with your grader implementation; you should make a fork:

https://github.com/ucsd-cse15l-w23/list-examples-grader

Your Grading Script

Do the work below in pairs. As a pair, you should produce one implementation: push it to one member’s fork of the starter GitHub repository and include the link to that repository in your notes.

When your script gets a student submission it should produce either:

- A grade message that says something about a score (maybe pass/fail, or maybe a proportion of tests passed – your choice) if the tests run.

- A useful feedback message that says what went wrong if for any reason the tests couldn’t be run (compile error, wrong file submitted, etc.)
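As one illustration of the first kind of output, the sketch below turns JUnit console output into a proportion-of-tests-passed message. It assumes JUnit 4's summary lines (`OK (N tests)` when everything passes, `Tests run: N,  Failures: M` otherwise). The function name `report_grade` and the sample outputs are hypothetical stand-ins, not output captured from the starter repository.

```bash
#!/usr/bin/env bash
# Sketch: derive a grade message from JUnit 4 console output.
# Assumption: the output contains either "OK (N tests)" or
# "Tests run: N,  Failures: M". report_grade is a hypothetical helper.

report_grade() {
  local output="$1"
  local total failed

  if printf '%s\n' "$output" | grep -q "^OK ("; then
    # All tests passed: pull N out of "OK (N tests)".
    total=$(printf '%s\n' "$output" | grep "^OK (" | grep -o "[0-9][0-9]*")
    echo "Grade: $total/$total tests passed"
  elif printf '%s\n' "$output" | grep -q "^Tests run:"; then
    # Some tests failed: the first number is the total, the second the failures.
    total=$(printf '%s\n' "$output" | grep "^Tests run:" | grep -o "[0-9][0-9]*" | sed -n 1p)
    failed=$(printf '%s\n' "$output" | grep "^Tests run:" | grep -o "[0-9][0-9]*" | sed -n 2p)
    echo "Grade: $((total - failed))/$total tests passed"
  else
    echo "Could not find a JUnit summary line, so the tests may not have run"
  fi
}

# Hypothetical sample outputs standing in for real JUnit runs.
passing_output='JUnit version 4.13.2
..
Time: 0.01

OK (2 tests)'

failing_output='JUnit version 4.13.2
.E.
Time: 0.02

FAILURES!!!
Tests run: 2,  Failures: 1'

passing_msg=$(report_grade "$passing_output")
failing_msg=$(report_grade "$failing_output")
broken_msg=$(report_grade "error: cannot find symbol")

echo "$passing_msg"
echo "$failing_msg"
echo "$broken_msg"
```

In a real grader the text passed to `report_grade` would come from redirecting the test run's output into a file or variable. The fallback branch is where a more specific feedback message could go.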

A general workflow for your script could be:

- Clone the repository of the student submission to a well-known directory name (provided in starter code)

- Check that the student code has the correct file submitted. If they didn’t, detect and give helpful feedback about it.

  - Useful tools here are `if` and `-e`/`-f`. You can use the `exit` command to quit a bash script early.

- Somehow get the student code and your test `.java` file into the same directory

  - Useful tools here might be `cp` and maybe `mkdir`

- Compile your tests and the student’s code from the appropriate directory with the appropriate classpath commands. If the compilation fails, detect and give helpful feedback about it.

  - Aside from the necessary `javac`, useful tools here are output redirection and error codes (`$?`) along with `if`

  - This might be a time where you need to turn off `set -e`. Why?

- Run the tests and report the grade based on the JUnit output.

  - Again output redirection will be useful, and also tools like `grep` could be helpful here
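The early workflow steps can be sketched as below. This is a minimal illustration under stated assumptions, not the starter repository's script: the directory names, the test file name `TestListExamples.java`, and the helpers `prepare_grading_area`, `run_step`, and `fake_compile` are all hypothetical. Throwaway directories stand in for a cloned repository and a stand-in function replaces `javac`, so the sketch runs without network access or a JDK.

```bash
#!/usr/bin/env bash
# Sketch: check for the expected file, copy files into one directory,
# and detect a failing command through its exit code.
# Every name below is hypothetical and chosen for illustration only.

prepare_grading_area() {
  local submission="$1" tests="$2" area="$3"

  # -f is true only when the path exists and is a regular file.
  if ! [ -f "$submission/ListExamples.java" ]; then
    echo "ListExamples.java not found at the top level of the repository"
    return 1   # in grade.sh itself, 'exit 1' would stop the script here
  fi

  mkdir -p "$area"
  cp "$submission/ListExamples.java" "$tests/TestListExamples.java" "$area"
  echo "Copied submission and tests into $area"
}

# Run a command, send stdout and stderr to a log file, and report failure.
run_step() {
  local label="$1" log="$2" status
  shift 2
  # Record the exit code instead of letting a failure end the script.
  "$@" > "$log" 2>&1 && status=0 || status=$?
  if [ "$status" -ne 0 ]; then
    echo "$label failed (exit code $status):"
    cat "$log"
    return 1
  fi
  echo "$label succeeded"
}

# Stand-in for a javac run that hits a compile error.
fake_compile() {
  echo "error: ';' expected" >&2
  return 1
}

# Demo: one well-formed submission and one with the file in a nested directory.
root=$(mktemp -d)
mkdir -p "$root/tests" "$root/good" "$root/nested/pa1"
touch "$root/tests/TestListExamples.java"
touch "$root/good/ListExamples.java" "$root/nested/pa1/ListExamples.java"

good_msg=$(prepare_grading_area "$root/good" "$root/tests" "$root/area-good") || true
nested_msg=$(prepare_grading_area "$root/nested" "$root/tests" "$root/area-nested") || true
compile_msg=$(run_step "Compilation" "$root/compile.log" fake_compile) || true

if [ -f "$root/area-good/ListExamples.java" ] && [ -f "$root/area-good/TestListExamples.java" ]; then
  both_copied=yes
else
  both_copied=no
fi

echo "$good_msg"
echo "$nested_msg"
echo "$compile_msg"
rm -rf "$root"
```

In an actual grader, `fake_compile` would be replaced by the real `javac` invocation with the appropriate classpath, and the same `run_step` pattern could wrap the test run.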

Write down in notes: screenshots of what your grader does on each of the sample student cases below.

“Student” Submissions

Assume the assignment spec was to submit:

- A repository with a file called `ListExamples.java`

- In that file, a class called `ListExamples`

- In that class, two methods:

  - `static List<String> filter(List<String> s, StringChecker sc)`

  - `static List<String> merge(List<String> list1, List<String> list2)`

- These methods should have the implementations suggested in lab 3

You should use the following repositories to test your grader:

- https://github.com/ucsd-cse15l-f22/list-methods-lab3, which has the same code as the starter from lab 3

- https://github.com/ucsd-cse15l-f22/list-methods-corrected, which has the methods corrected (I would expect this to get full or near-to-full credit)

- https://github.com/ucsd-cse15l-f22/list-methods-compile-error, which has a syntax error of a missing semicolon. Note that your job is not to fix this, but to decide what to do in your grader with such a submission!

- https://github.com/ucsd-cse15l-f22/list-methods-signature, which has the types for the arguments of `filter` in the wrong order, so it doesn’t match the expected behavior.

- https://github.com/ucsd-cse15l-f22/list-methods-filename, which has a great implementation saved in a file with the wrong name.

- https://github.com/ucsd-cse15l-f22/list-methods-nested, which has a great implementation saved in a nested directory called `pa1`.

- Challenge: https://github.com/ucsd-cse15l-f22/list-examples-subtle, which has more subtle bugs (hints: see `assertSame`, which compares with `==` rather than `.equals()`, and think hard about duplicates for `merge`)
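To check a grader against all of these in one go, a small loop helps. The sketch below is a dry run under stated assumptions: `run_all` and `dry_run_grader` are hypothetical helpers, and `dry_run_grader` only prints the command it would run, so nothing is cloned.

```bash
#!/usr/bin/env bash
# Sketch: call a grader function once per sample submission URL.
# run_all and dry_run_grader are hypothetical helpers for illustration.

# Stand-in grader: prints the command instead of running it.
dry_run_grader() {
  echo "would run: bash grade.sh $1"
}

# Call the named grader function for each URL, continuing after failures.
run_all() {
  local grader="$1" url
  shift
  for url in "$@"; do
    echo "=== $url ==="
    "$grader" "$url" || echo "(grader exited with a non-zero status)"
  done
}

all_output=$(run_all dry_run_grader \
  "https://github.com/ucsd-cse15l-f22/list-methods-lab3" \
  "https://github.com/ucsd-cse15l-f22/list-methods-corrected" \
  "https://github.com/ucsd-cse15l-f22/list-methods-compile-error" \
  "https://github.com/ucsd-cse15l-f22/list-methods-signature" \
  "https://github.com/ucsd-cse15l-f22/list-methods-filename" \
  "https://github.com/ucsd-cse15l-f22/list-methods-nested" \
  "https://github.com/ucsd-cse15l-f22/list-examples-subtle")

echo "$all_output"
```

Swapping `dry_run_grader` for a function whose body is `bash grade.sh "$1"` would run the real script on each repository, with the `===` header lines keeping the results apart.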

Other Student Submissions

After you’re satisfied with the behavior on all of those submissions, write your own. Try to come up with at least two examples:

- One that is wrong but is likely to get a full score

- One that is mostly correct but crashes the grader and doesn’t give a nice error back (and is likely to cause a Piazza/EdStem post saying “the grader is broken!”)

You should create these as new, public Github repositories, so that you can run them using the same grader script by providing the Github URL.

Write down in notes: Run everyone’s newly-developed student submissions on everyone’s grader. That means each team should be running commands like

bash grade.sh <student-submission-from-some-group>

Whose grading script is the most user-friendly across those tests?

Running it Through a Server

We’ve also provided our Server.java and a server we wrote for you called GradeServer.java in the starter repository.

You can compile them and use

java GradeServer 4000

to run the server.

Look at the code to understand the expected path and parameters in GradeServer.java. Load a URL at the /grade path with one of the repos above as the query parameter. What happens?

That’s quite a bit of the way towards an autograder like Gradescope!

Write down in notes: Show a screenshot of the server running your autograder in a browser.

Discuss and write down: What other features are needed to make this work more like Gradescope’s autograder? (Think about running for different students, storing grades, presenting results, etc)

Congratulations! You’ve done one kind of the work that your TAs do when setting up classes 🙂